Watt Matters in AI: in search of energy-efficient AI

In anticipation of the "Watt Matters in AI" conference, IO+ describes the current situation, the societal needs, and the scientific progress

Published on April 19, 2025

Bart, co-founder of Media52 and Professor of Journalism, oversees IO+, events, and Laio. A journalist at heart, he keeps writing as many stories as possible.

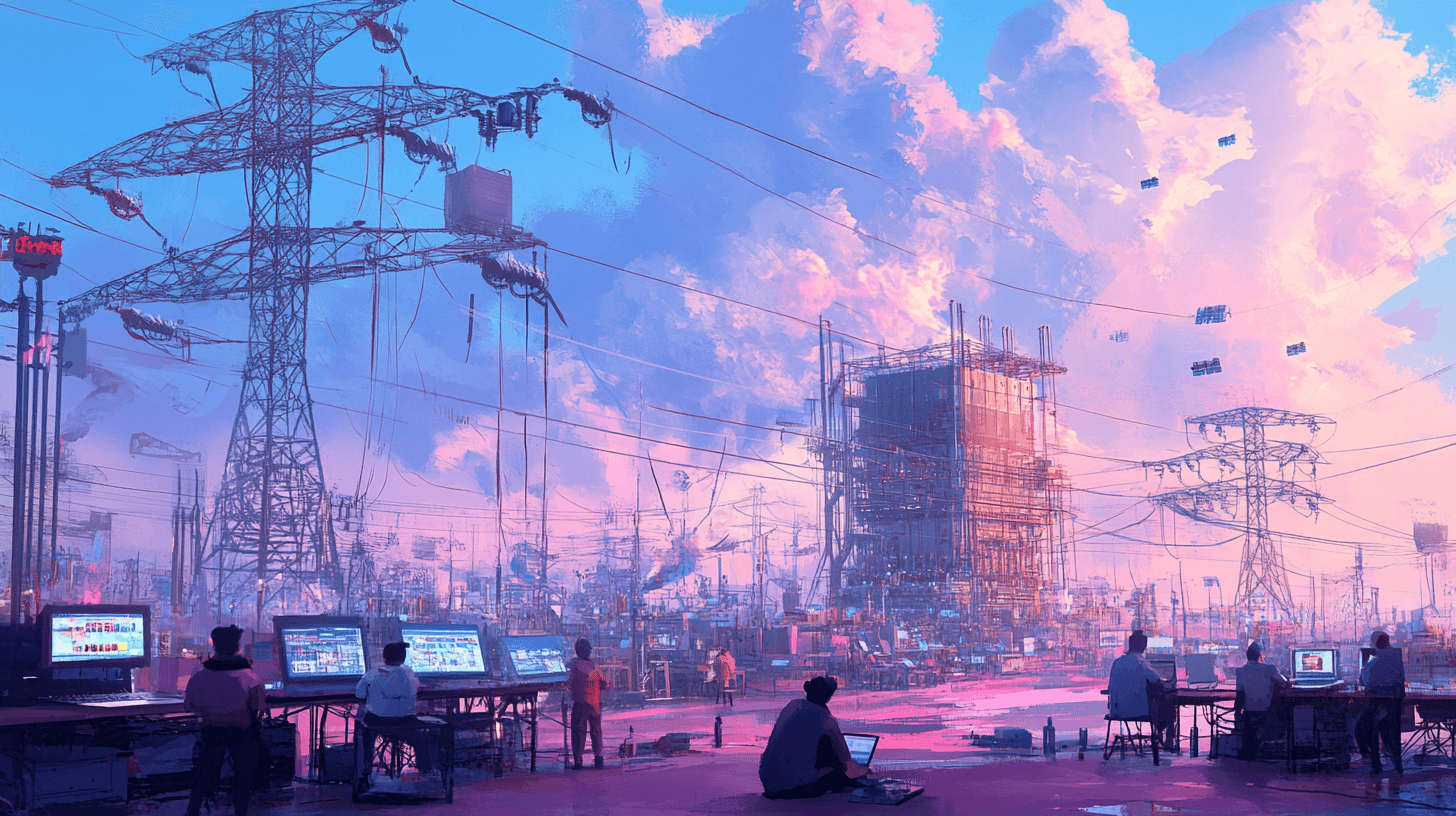

Watt matters in AI.

Artificial Intelligence (AI) can help address a wide range of societal problems and contribute to the UN Sustainable Development Goals. However, the anticipated massive use of AI will severely strain energy resources, creating new problems of its own. Mastering this conundrum requires new paradigms at multiple levels, from innovative hardware solutions to regulations governing the use of AI, and everything in between. This goes far beyond incremental improvements to present-day solutions: we need a holistic approach that leads to radical breakthroughs.

That is why IO+ is co-organizing a conference on November 26 to explore the potential of these new paradigms and lay the groundwork for AI with vastly improved energy efficiency. The conference will review recent developments in AI and address promising emerging concepts such as brain-inspired neuromorphic computing, physics-based computing, and approximate computing.

In anticipation of this conference, IO+ is publishing a series of articles that explain the challenge and focus on potential solutions; this is the first. Watt really matters in AI, and bringing multidisciplinary experts together at this conference can help establish an ecosystem that pushes innovation to new levels. Early-bird tickets are available at wattmattersinai.eu.

Watt Matters in AI

Watt Matters in AI is an initiative of Mission 10-X, in collaboration with the University of Groningen, University of Twente, Eindhoven University of Technology, Radboud University, and the Convention Bureau Brainport Eindhoven. IO+ is responsible for marketing, communication, and conference organization.

More information is available on the conference website, wattmattersinai.eu.

Potential gains in energy efficiency

AI’s growing energy footprint has sparked intense research into more sustainable computing methods. Over the past year, progress in both hardware and software has shown that substantial gains in energy efficiency are possible, often by taking cues from nature (brain-inspired chips, memristor-based neuromorphic devices), exploiting the laws of physics (photonic and analog computing), and intelligently trimming the fat from today’s AI models (pruning, quantization, approximate computing). These approaches are highly complementary: the most energy-efficient AI systems will likely run efficient algorithms on specialized low-power hardware. Encouragingly, many of the advances highlighted in this series are open-access, accelerating knowledge sharing in this fast-moving field.
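To make "trimming the fat" a little more concrete, here is a minimal sketch of two of the techniques named above, magnitude pruning and symmetric 8-bit post-training quantization, applied to a toy list of weights. The function names, the weight values, and the 25% sparsity are illustrative assumptions, not taken from any specific framework or study; production tools apply the same ideas to whole tensors.

```python
# Minimal sketch of two model-trimming techniques on a toy list of weights:
# magnitude pruning and symmetric int8 post-training quantization.
# Function names and values are illustrative, not from any specific framework.


def magnitude_prune(weights, sparsity):
    """Set the fraction `sparsity` of smallest-magnitude weights to zero."""
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] with one shared scale: w ~ scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.82, -0.03, 0.45, -0.91, 0.07, 0.002, -0.38, 0.66]
    pruned = magnitude_prune(w, sparsity=0.25)  # zeroes the 2 smallest weights
    q, scale = quantize_int8(pruned)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(pruned, restored))
    print("pruned:", pruned)
    print("int8 codes:", q)
    print(f"max rounding error {max_err:.5f} (bound: scale/2 = {scale / 2:.5f})")
    # 4 bytes per fp32 weight vs 1 byte per int8 code (plus one shared scale)
    print("fp32 bytes:", 4 * len(pruned), "int8 bytes:", len(q))
```

Quantization cuts the bytes stored and moved per weight by a factor of four relative to 32-bit floats, and moving data is often a large share of inference energy. Pruning, by contrast, only saves energy when the hardware or software kernels actually skip the zeros, which is one reason co-design of models and hardware matters.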

Looking ahead, we can expect even tighter co-design of models and hardware, more emphasis on “Green AI” metrics in research, and broader industry adoption of these efficiency techniques. By continuing on this path, the community aims to reconcile AI’s rapid growth with the practical need for energy-conscious computing, delivering the benefits of AI while minimizing its environmental and economic costs. This will, of course, take time.
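One simple form that Green AI metrics can take is energy and emissions per inference. The sketch below assumes the average power draw and latency are already known or measured; the function names and every input number are hypothetical placeholders, not measurements of any real system.

```python
# Hypothetical "Green AI" reporting helpers: energy and emissions per inference.
# Function names and the example numbers are placeholders, not measurements.


def energy_per_inference_wh(avg_power_w, latency_s):
    """Energy in Wh = average power (W) x duration (s) / 3600 s per hour."""
    return avg_power_w * latency_s / 3600.0


def emissions_g_co2e(energy_wh, grid_g_per_kwh):
    """Emissions in g CO2e = energy (kWh) x grid carbon intensity (g/kWh)."""
    return energy_wh / 1000.0 * grid_g_per_kwh


if __name__ == "__main__":
    # Placeholder inputs: a 300 W accelerator busy for 0.5 s per request,
    # on a grid emitting 400 g CO2e per kWh.
    e_wh = energy_per_inference_wh(avg_power_w=300.0, latency_s=0.5)
    co2 = emissions_g_co2e(e_wh, grid_g_per_kwh=400.0)
    print(f"{e_wh:.4f} Wh and {co2:.4f} g CO2e per inference")
    # Scale to one million requests and convert Wh to kWh
    print(f"{e_wh * 1e6 / 1000:.1f} kWh per million inferences")
```

Reporting both numbers separates what the model and hardware control (energy) from what depends on where it runs (grid carbon intensity).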

Modern AI models have achieved remarkable capabilities at the cost of enormous computational and energy demands. Training and deploying large neural networks can require gigawatt-hours of electricity, contributing to significant carbon emissions. For example, generative AI systems may consume up to 33× more energy to perform a task than conventional, task-specific software. Projections indicate that, without efficiency improvements, the electricity consumed by data centres (including AI and cryptocurrency mining) could more than double, from about 460 TWh in 2022 to more than 1,000 TWh by 2026. This escalating energy appetite has spurred a wave of research into making AI more energy-efficient.
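The projection above can be translated into an implied annual growth rate. This is only back-of-the-envelope arithmetic under one stated assumption: that the ~460 TWh baseline lies four years before 2026.

```python
# Back-of-the-envelope arithmetic on the projection quoted above.
# ASSUMPTION: the ~460 TWh baseline year is taken to be four years
# before 2026 (i.e. 2022).
import math

baseline_twh = 460.0    # starting point quoted in the text
projected_twh = 1000.0  # 2026 projection quoted in the text
years = 4               # assumed horizon (2022 -> 2026)

growth_factor = projected_twh / baseline_twh
cagr = growth_factor ** (1 / years) - 1            # compound annual growth rate
doubling_years = math.log(2) / math.log(1 + cagr)  # time to double at that rate

print(f"growth factor: {growth_factor:.2f}x")  # 2.17x, i.e. "more than double"
print(f"implied annual growth: {cagr:.1%}")    # about 21.4% per year
print(f"doubling time: {doubling_years:.1f} years")
```

A sustained growth rate of roughly a fifth per year is what makes efficiency gains at every level, rather than one-off savings, so pressing.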

Hardware and software

Researchers are tackling the problem on several fronts: hardware innovations (low-power, brain-inspired computing devices and accelerators), software innovations (algorithms and techniques that reduce the computational cost of AI models), and behavioral changes (ethical frameworks, corporate responsibility, user involvement, policy development, and public awareness). Over the past year, significant progress has been made in areas such as neuromorphic computing, analog and photonic processors, approximate computing, model compression (pruning and quantization), energy-aware neural architecture design, and specialized AI chips.
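Neuromorphic computing saves energy largely by doing work only when spikes occur. A minimal sketch of a discrete-time leaky integrate-and-fire (LIF) neuron, a textbook model that many spiking systems build on, shows the idea: the neuron integrates its input, leaks toward rest, and emits sparse, discrete spikes. The function name and the leak, threshold, and input values are arbitrary illustrations, not the parameters of any particular chip.

```python
# Minimal discrete-time leaky integrate-and-fire (LIF) neuron.
# Parameter values are illustrative assumptions, not from any specific chip.


def simulate_lif(inputs, leak=0.9, threshold=1.0, v_reset=0.0):
    """Return the time steps at which the neuron spikes.

    Each step: v <- leak * v + input; if v >= threshold, spike and reset.
    """
    v = v_reset
    spikes = []
    for t, current in enumerate(inputs):
        v = leak * v + current  # leaky integration of the input
        if v >= threshold:      # fire once the threshold is crossed
            spikes.append(t)
            v = v_reset         # reset membrane potential after a spike
    return spikes


if __name__ == "__main__":
    inputs = [0.3] * 20  # weak constant input for 20 steps
    spikes = simulate_lif(inputs)
    print("spike times:", spikes)  # [3, 7, 11, 15, 19]
    print(f"active fraction: {len(spikes) / len(inputs):.0%}")  # 25%
```

Here the neuron is active in only one step out of four; in event-driven hardware, downstream computation (and hence energy) scales roughly with that spike activity rather than with a dense multiply at every step.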

Many of these advances have been published in leading journals and conferences and are available as open-access articles. In the next three articles in this series, we review recent research (2024-2025) illustrating strategies to improve the energy efficiency of AI through hardware, software, and behavior-driven adaptations.

Watt Matters in AI

Watt Matters in AI is a conference that aims to explore the potential of AI with significantly improved energy efficiency. In the run-up to the conference, IO+ publishes a series of articles that describe the current situation and potential solutions. Tickets to the conference can be found at wattmattersinai.eu.