Stop scaling cloud chips, Axelera AI warns: purpose-built AI wins

The edge AI market is booming, but deployments stall in "pilot purgatory." The fix? Radical silicon that eliminates the data bottleneck.

Published on November 4, 2025

Fabrizio Del Maffeo, © Axelera AI

Bart, co-founder of Media52 and Professor of Journalism, oversees IO+, events, and Laio. A journalist at heart, he keeps writing as many stories as possible.

The AI revolution at the enterprise level is undeniable, yet the promise of AI inference at the edge - on a camera, a factory robot, or a city traffic light - has largely remained a proof-of-concept pipe dream. Companies get stuck in a state dubbed "pilot purgatory," unable to scale from the lab bench to mass production.

According to industry disruptor Axelera AI, the reason is a fundamental infrastructure mismatch. "We have spent years trying to shoehorn silicon designed for hyperscale cloud data centres or low-power mobile phones into demanding, real-world edge applications. The result is hardware that looks great on a spec sheet but buckles under pressure." At last week's AI Beyond The Edge forum, Axelera AI CEO Fabrizio Del Maffeo addressed this head-on: "There's a massive disconnect between what everyone's talking about and what's actually working in the real world." Del Maffeo's diagnosis: "Everyone's trying to shove cloud chips or mobile processors into edge applications. The underlying architecture just wasn't built for this job."

Ioannis Papistas is one of the keynote speakers at the Watt Matters in AI Conference.

Industrial customers face a crippling choice: high-end GPUs that consume so much power they can add hundreds of euros to monthly electricity bills per deployment, or adapted hardware that thermally throttles and fails when deployed in hot, constrained factory or retail environments. For smart city planners dreaming of analyzing 4K/8K video streams, the ROI calculation with traditional GPU solutions has simply been a non-starter; the cost of infrastructure and cooling is prohibitive, rendering necessary public safety and traffic analysis projects aspirational rather than viable.

This failure isn't a problem of poor model design or capability, Del Maffeo claims. It’s a design flaw in the very foundations of the compute architecture being used.

The Von Neumann Bottleneck

To understand why traditional hardware fails at the edge, you have to look at how neural networks actually operate. Whether processing video, speech, or language, AI inference spends 70 to 90 percent of its time on matrix-vector multiplications.

In a traditional Von Neumann architecture, the processor and the memory are separate. Every calculation therefore requires data to be shuttled back and forth between them. The energy spent on this data movement, rather than on the computation itself, becomes the primary power sink. On the edge, where every milliwatt and millisecond matters, this architecture is grossly inefficient.
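A rough count illustrates why this workload is dominated by data movement. The sketch below is an illustration, not Axelera's own analysis: in a matrix-vector product y = Wx, each weight is fetched once and used in exactly one multiply-accumulate, so nothing amortizes the cost of moving it. The function name, the 1024 x 1024 layer size, and the 1-byte (INT8) value size are assumptions.

```python
def matvec_traffic(rows: int, cols: int, bytes_per_value: int = 1) -> dict:
    """Count work and data movement for y = W @ x, with W of shape (rows, cols)."""
    macs = rows * cols                               # one multiply-accumulate per weight
    weight_bytes = rows * cols * bytes_per_value     # every weight is read once
    vector_bytes = (cols + rows) * bytes_per_value   # read x, write y
    total_bytes = weight_bytes + vector_bytes
    return {
        "macs": macs,
        "bytes_moved": total_bytes,
        "macs_per_byte": macs / total_bytes,
    }


# Hypothetical layer size, chosen only for illustration.
stats = matvec_traffic(1024, 1024)
print(stats)
```

With less than one multiply-accumulate per byte fetched, the processor has little arithmetic to do for each value it pulls from memory, which is why moving the weights, not multiplying them, sets the energy bill.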

The radical shift proposed by Eindhoven-based Axelera AI lies in its Digital In-Memory Computing (D-IMC) architecture, a purpose-built design that places the memory and compute elements directly adjacent, on the same block.

By performing calculations right where the data is stored, D-IMC dramatically reduces data movement and with it the bottleneck described above. This isn't about creating the fastest chip overall; it's about being optimal for the high-throughput, low-latency matrix-vector operations that define modern AI workloads. This efficiency allows Axelera's Metis AI Processing Unit (AIPU) platform to deliver competitive Tera Operations Per Second (TOPS) within extremely low power budgets (e.g., 4-8 watts), maintaining performance consistency that adapted chips simply cannot match under sustained load.
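To put the quoted 4-8 watt budget in perspective, the arithmetic below converts continuous power draw into monthly energy. The always-on duty cycle and the 30-day month are assumptions, and the function name is hypothetical; no electricity price is assumed, since the article gives none.

```python
def monthly_energy_kwh(watts: float, hours_per_day: float = 24.0, days: int = 30) -> float:
    """Energy used by a device drawing `watts` continuously, in kilowatt-hours."""
    return watts * hours_per_day * days / 1000.0


# The two ends of the power range quoted in the article.
for watts in (4, 8):
    print(f"{watts} W continuous: {monthly_energy_kwh(watts):.2f} kWh per 30 days")
```

Multiplying the result by a local tariff gives the running cost per device, which can then be compared against whatever hardware is being replaced.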

Where architecture meets economics

When the hardware architecture finally aligns perfectly with the workload requirements, applications that were technically feasible in a clean lab suddenly become economically practical in the messy world. This shift is already making four critical use cases viable:

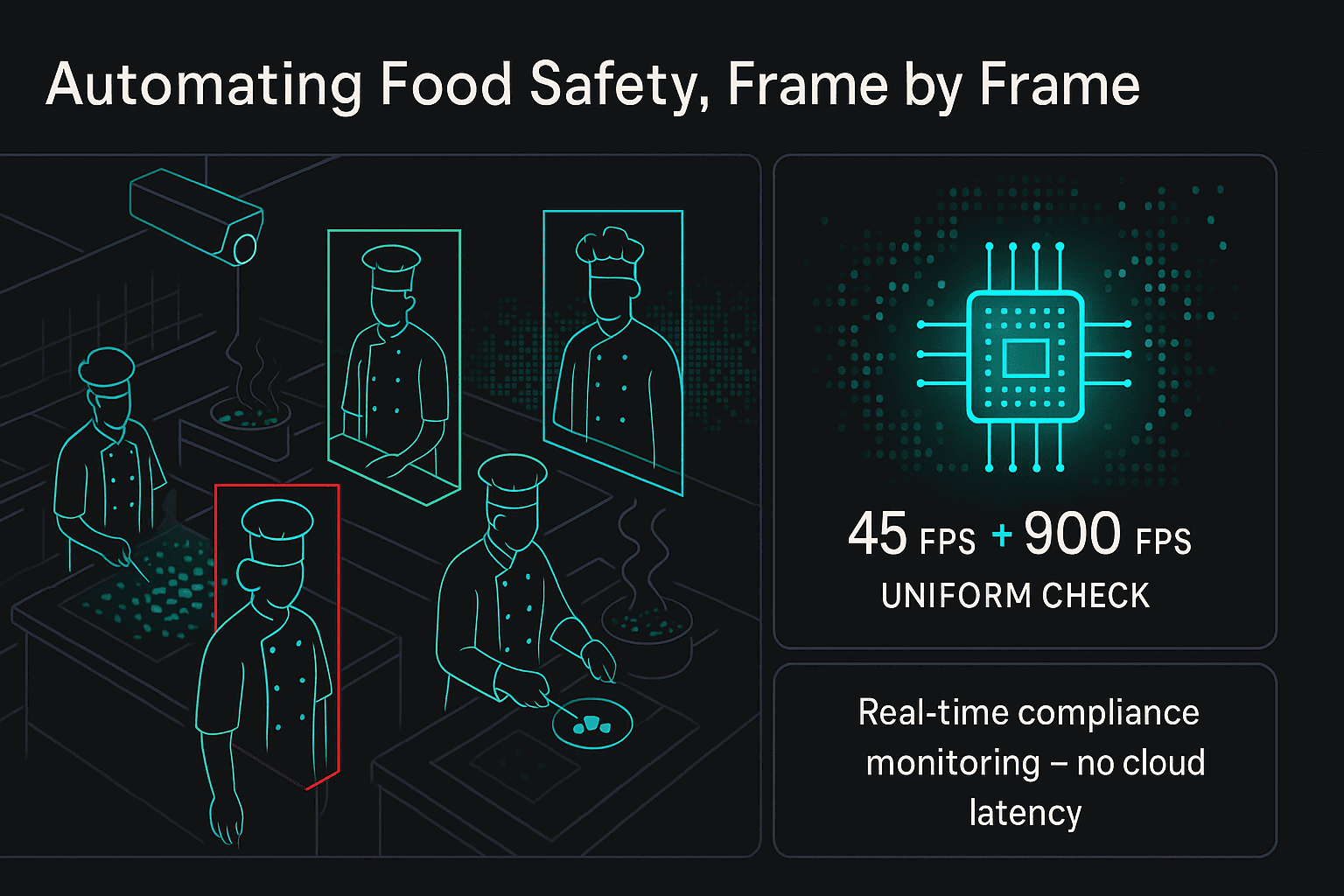

1. Food Safety & Kitchen Monitoring

The challenge in food service is verifying uniform compliance (hats, gloves, etc.) for dozens of employees in real-time without hiring dedicated staff. Previous systems were too slow or too complex. Axelera’s architecture enables simultaneous, high-speed processing on a single edge device: 45 FPS for person detection plus an astonishing 900 FPS for uniform verification. This dual-stream efficiency automates food safety compliance effectively, functioning robustly in the high-heat, high-traffic conditions of a commercial kitchen.
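The two throughput figures can be related with simple arithmetic. The sketch below assumes a cascade in which each person-detection frame is followed by one uniform check per detected person; the article does not describe the pipeline, so that structure and the function names are assumptions.

```python
def frame_time_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def checks_per_frame(detect_fps: float, verify_fps: float) -> float:
    """Verification inferences that fit into one detection frame (assumed cascade)."""
    return verify_fps / detect_fps


DETECT_FPS = 45.0   # person detection rate quoted in the article
VERIFY_FPS = 900.0  # uniform verification rate quoted in the article

print(f"Detection frame time: {frame_time_ms(DETECT_FPS):.1f} ms")
print(f"Uniform checks per detection frame: {checks_per_frame(DETECT_FPS, VERIFY_FPS):.0f}")
```

Under that assumption, the quoted rates would leave room for about 20 individual uniform checks for every detection frame.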

2. High-Speed Seed Sorting in Agriculture

Precision agriculture demands extreme speed. The entire cycle - image capture, AI processing, and mechanical actuation decision - must be completed in a mere 4 milliseconds. High-end consumer GPUs, such as the Nvidia RTX 4080, required 2.3 ms for the AI processing alone, leaving only 1.7 ms for everything else and failing the requirement. The purpose-built Metis chip cuts AI processing time to 1.2 ms, leaving 2.8 ms for the other stages and making the entire use case viable with ample margin for mechanical components. This throughput directly justifies the high capital investment in seed and produce sorting equipment.
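The budget arithmetic behind this comparison can be checked in a few lines. The 4 ms cycle and the two inference times come from the article; the function and constant names are hypothetical, and the split of the remaining time between capture and actuation is not given in the source, so it is left as a single figure.

```python
CYCLE_BUDGET_MS = 4.0  # full capture + inference + actuation-decision cycle


def remaining_budget_ms(inference_ms: float, budget_ms: float = CYCLE_BUDGET_MS) -> float:
    """Time left for image capture and actuation after AI inference."""
    return budget_ms - inference_ms


for name, inference_ms in [("Nvidia RTX 4080", 2.3), ("Metis", 1.2)]:
    left = remaining_budget_ms(inference_ms)
    share = inference_ms / CYCLE_BUDGET_MS
    print(f"{name}: {inference_ms} ms inference ({share:.0%} of cycle), {left:.1f} ms left")
```

The GPU figure is within the 4 ms cycle on its own, so the stated failure only follows if capture and actuation together need more than the 1.7 ms it leaves.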

3. Resilient Manufacturing Quality Control

Factory floors require quality control systems to run multiple complex inspection models (e.g., surface defect detection, assembly verification) simultaneously across several production lines. Power draw and thermal management are major issues. The Metis platform enables consistent, parallel processing of multiple camera feeds without the thermal throttling common to adapted solutions. The business impact is immediate and quantifiable: a 30% reduction in quality issues, coupled with a 50% reduction in inspection costs compared to outdated manual processes.

4. 4K/8K Smart City Applications

The core obstacle in smart city deployment is processing massive 4K and 8K video streams from multiple cameras for public safety, traffic flow analysis, and tracking. The multi-core D-IMC architecture and a robust SDK handle these high-definition streams with ease, eliminating the need for prohibitive, power-hungry centralized infrastructure. For municipalities, this changes the equation entirely, shifting the financial model to one with a viable ROI, making ambitious urban deployment goals practical rather than merely aspirational.

The gap between AI potential and deployment is not a software problem, but a hardware one. For enterprises ready to scale beyond the pilot phase, the strategic choice is no longer about buying the fastest chip, but the optimal architecture.

Watt Matters in AI

Order your tickets for the Watt Matters in AI Conference (26 November, Eindhoven) here: wattmattersinai.eu