No escape: AI is everywhere, and what that means for the planet

Even if you want to avoid using AI, you probably can’t. The rising energy costs are worrying, an MIT Technology Review roundtable showed.

Published on May 23, 2025

Bart, co-founder of Media52 and Professor of Journalism, oversees IO+, events, and Laio. A journalist at heart, he keeps writing as many stories as possible.

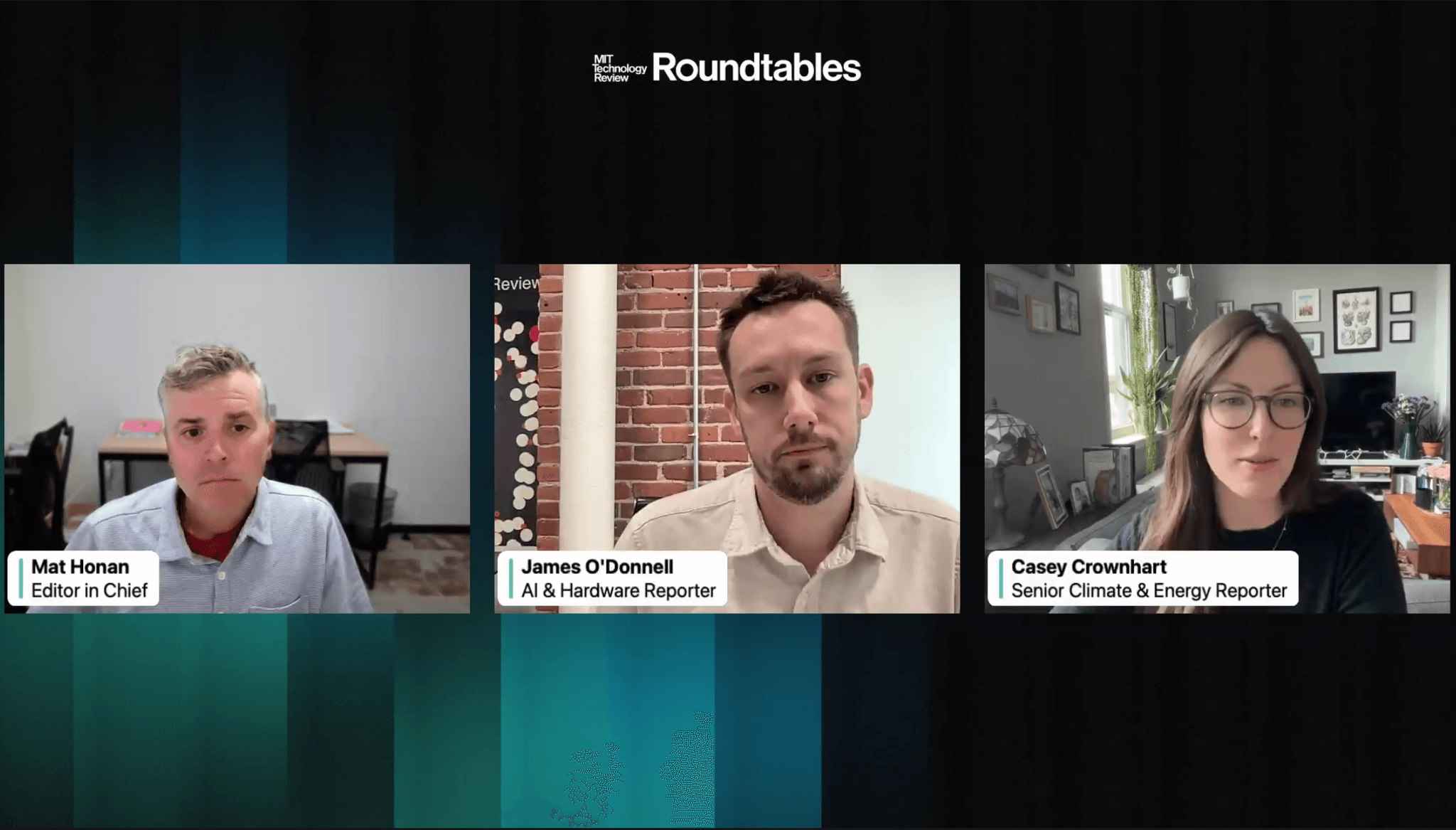

By the time you read this, you’ve probably already interacted with AI, whether you meant to or not. That’s one of the central insights from a recent MIT Technology Review roundtable, where Editor-in-Chief Mat Honan spoke with reporters James O’Donnell and Casey Crownhart about the mounting impact of AI on our energy systems and the growing impossibility of opting out. The conversation was part of MIT Technology Review’s series on AI and our energy future.

“People say, ‘Well, we can just choose not to use this stuff,’” O’Donnell noted. “But that’s not going to be a choice for many of us in the coming years.” AI is increasingly embedded in everything — from customer service bots to productivity tools and fitness apps. In other words, even if you don’t want to use AI, it’s likely already using you.

Watt Matters in AI

Watt Matters in AI is an initiative of Mission 10-X, in collaboration with the University of Groningen, University of Twente, Eindhoven University of Technology, Radboud University, and the Convention Bureau Brainport Eindhoven. IO+ is responsible for marketing, communication, and conference organization.

More information is available on the conference website, wattmattersinai.eu.

From training to inference: a shift in the energy story

Much of the public concern about AI’s environmental impact has focused on the energy required to train the large models behind tools like ChatGPT. That process involves feeding vast amounts of data into enormous computing clusters, often over weeks or months.

But the bigger story now, say O’Donnell and Crownhart, is what happens after training: inference — the real-time process of responding to user queries. “Inference is where the bulk of energy use is happening now,” O’Donnell said. “Researchers told us that 80 to 90% of the electricity consumed by AI models today goes into inference, not training.”

And inference doesn’t rest. “It’s 24/7,” Crownhart added. “It’s always on, always answering something somewhere. You can’t shift it to the sunniest part of the day to use solar — it happens whenever users make requests.”

Small queries, big footprints

How much energy are we talking about? That depends on what you're asking AI to do.

Using open-source models (because big tech companies declined to share data), the team found that a typical text query consumed about as much electricity as running a microwave for 8 seconds. Not much, until you multiply it by hundreds of millions of daily users. Image generation, surprisingly, often used less energy than text generation, depending on the model size.

But video? That’s where things skyrocket. Generating a single five-second video clip could use 700 times the energy of generating an image.

“If you’re casually creating AI-generated videos just for fun, the energy cost is substantial,” O’Donnell said.
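To make the microwave comparison concrete, here is a rough back-of-envelope sketch that turns "8 seconds of microwave time" into joules and then into a daily total. The microwave power (1,000 W) and the daily query volume (300 million) are hypothetical placeholders chosen for illustration, not figures from the roundtable; real appliances, models, and usage levels vary.

```python
# Back-of-envelope sketch: scale a per-query energy estimate to a daily total.
# ASSUMPTIONS (not from the roundtable): the microwave draws 1,000 W, and the
# service handles 300 million text queries per day. Both are placeholders.

MICROWAVE_WATTS = 1_000        # assumed microwave power draw, in watts
SECONDS_PER_QUERY = 8          # "about 8 seconds of microwave time" per text query
QUERIES_PER_DAY = 300_000_000  # hypothetical daily query volume

JOULES_PER_MWH = 3.6e9         # 1 MWh = 1,000,000 Wh x 3,600 J/Wh


def joules_per_query(watts: float, seconds: float) -> float:
    """Energy (J) = power (W) x time (s)."""
    return watts * seconds


def daily_energy_mwh(per_query_joules: float, queries: int) -> float:
    """Total daily energy across all queries, converted to megawatt-hours."""
    return per_query_joules * queries / JOULES_PER_MWH


per_query = joules_per_query(MICROWAVE_WATTS, SECONDS_PER_QUERY)
daily = daily_energy_mwh(per_query, QUERIES_PER_DAY)
print(f"Per query: {per_query:,.0f} J (about {per_query / 3600:.1f} Wh)")
print(f"Per day:   {daily:,.0f} MWh")
```

With these placeholder inputs, each query works out to 8,000 J (about 2.2 Wh) and the daily total to roughly 667 MWh. The total scales linearly with every input, which is the reporters' point: a tiny per-query cost becomes significant at scale.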

Where you are matters

Another surprising finding: the location of the data center handling your query has a major impact on the associated carbon emissions.

Even if two queries use the same amount of electricity, their environmental footprint varies depending on the local grid’s energy mix. “A query processed in coal-heavy West Virginia will have a much larger carbon footprint than one handled in solar-rich California,” Crownhart explained.

That’s because carbon intensity — the emissions per unit of electricity — fluctuates widely across regions and even by time of day. But users don’t know where their queries go. “Companies don’t disclose the locations of their data centers,” she said. “So we can’t track the environmental impact of individual actions.”
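The relationship described here is a simple multiplication: emissions equal the electricity used times the carbon intensity of the grid supplying it. The sketch below holds the energy per query fixed and varies only the grid. The intensity values and the 2 Wh query size are hypothetical placeholders, not measured figures for West Virginia, California, or any real data center.

```python
# Sketch: identical electricity use, different grids -> different emissions.
# emissions (g CO2) = energy (kWh) x carbon intensity (g CO2 per kWh)
# ASSUMPTION: all numbers below are illustrative placeholders.

ENERGY_KWH = 0.002  # hypothetical energy for one query (2 Wh)

GRID_INTENSITY_G_PER_KWH = {
    "coal-heavy grid (hypothetical)": 900.0,
    "solar-rich grid (hypothetical)": 200.0,
}


def emissions_grams(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Carbon footprint of a fixed amount of electricity on a given grid."""
    return energy_kwh * intensity_g_per_kwh


for grid, intensity in GRID_INTENSITY_G_PER_KWH.items():
    grams = emissions_grams(ENERGY_KWH, intensity)
    print(f"{grid}: {grams:.2f} g CO2")
```

Under these placeholder intensities, the same query produces 4.5 times more CO2 on the coal-heavy grid than on the solar-rich one. Because carbon intensity also changes by hour, the same calculation run at a different time of day on the same grid could give a different answer.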

The team also flagged a lesser-known but equally critical issue: water consumption. Data centers use massive amounts of potable water to keep their GPUs from overheating. In water-scarce regions like Arizona or parts of Spain, that adds up quickly, often to millions of liters per day per site.

And yes, most of that water evaporates and doesn’t return to the local water supply.

What can you do?

Should users feel guilty about asking a chatbot to write a haiku?

Not exactly, the panelists said. While individuals can make choices, such as avoiding video generation or keeping prompts concise, the bigger issue is corporate transparency.

“None of the major AI companies we contacted were willing to share energy use data,” Honan emphasized. “Yet they’re asking us to commit public energy infrastructure to something whose footprint they won’t even disclose.”

Instead of focusing solely on personal responsibility, the journalists call for public pressure on companies to become more transparent and accountable, and for policymakers to factor energy and water use into decisions about AI expansion.

A fork in the road

The team concluded with two critical takeaways. First: AI’s growing energy demands are not just part of general digital expansion; they represent a specific, sharp inflection point. “From 2005 to 2017, data center energy use stayed flat. Now, it’s spiking, because of AI,” said O’Donnell.

Second: The future is still being written. “Everyone wants one number for AI’s footprint,” said Crownhart. “But it varies depending on the model, the query, the time, and the grid. That means we still have choices about how this plays out, especially on the energy side.”

As AI becomes inescapable, our choices about transparency, infrastructure, and efficiency will determine whether this new layer of digital life also becomes an environmental burden we can’t escape.